Anytime we draw conclusions from statistical inference, other process evidence must support the conclusion. Statistical evidence is only half of the voice of the process. The big picture includes a thorough look at the practical significance of the statistical result.

One area that gives many process improvement teams difficulty is selecting an acceptance level that is consistent with the reality surrounding the process. No hard-and-fast rules guarantee the best acceptance criteria. Choosing them requires observing the process, evaluating the business’s objectives, understanding its economic realities, and, most importantly, knowing the CTQs of its customer base. For example, a study of airplane safety calls for much stricter acceptance criteria than a low-risk process does, such as an alpha level of 0.01 rather than the conventional 0.05.
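In hypothesis testing, the acceptance level is usually expressed as alpha, the risk the team accepts of concluding that a difference exists when it does not. The short Python sketch below uses a hypothetical `reject_null` helper and an invented p-value of 0.03 to show how the same test result can pass or fail depending on the alpha the team chooses. The alpha values are illustrative only, not recommendations.

```python
def reject_null(p_value: float, alpha: float) -> bool:
    """Return True when the p-value falls below the chosen acceptance level (alpha).

    Hypothetical helper for illustration; alpha must lie strictly between 0 and 1.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return p_value < alpha


# Hypothetical p-value from some hypothesis test on the process.
p = 0.03
for alpha in (0.10, 0.05, 0.01):
    verdict = "statistically significant" if reject_null(p, alpha) else "not significant"
    print(f"alpha = {alpha:.2f}: {verdict}")
```

The same p-value is significant at alpha = 0.10 and 0.05 but not at 0.01, which is why the acceptance level has to be chosen from the process and customer context rather than by habit.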

Another problem area is interpreting the statistical result. The data create a picture of the process’s behavior, but is that picture consistent with reality? Some important questions to answer are:

Does the statistical result make sense within the process’ current reality?

Does the statistical result point the way to defect reduction?

Does the statistical result point toward a reduced COPQ?

Are there any negative impacts associated with accepting the statistical result?

Does the customer care?

A good data detective will always question statistical conclusions. Performing reality checks throughout the statistical analysis process will help to prevent costly mistakes, improve buy-in, and help to sell the recommendations made by the team.

Practical Significance versus Statistical Significance

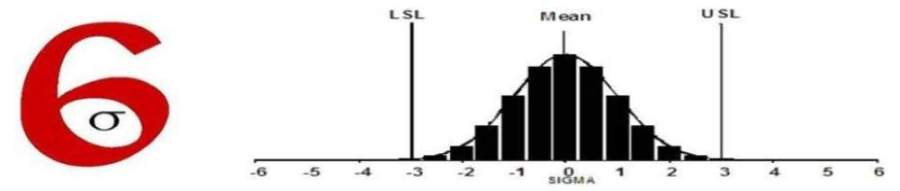

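When hypothesis-testing tools are used, we are working with statistical significance. Statistical significance depends on the size of the observed difference relative to the process variation and on the quality and amount of the data; with enough data, even a very small difference can be statistically significant. Practical significance asks whether the observed difference is large enough to matter to the process, the customer, or the business.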
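To see how the amount of data drives statistical significance, the sketch below holds an observed difference and the process standard deviation fixed and changes only the sample size. It assumes a two-sample z-test with a known, common standard deviation, a simplification of the t-test a team would usually run. The helper name `two_sample_z_p_value` and all numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_z_p_value(diff: float, sigma: float, n_per_group: int) -> float:
    """Two-sided p-value for a difference in means between two equal-size
    groups, assuming a known common standard deviation (z-test)."""
    standard_error = sigma * sqrt(2.0 / n_per_group)
    z = abs(diff) / standard_error
    return 2.0 * (1.0 - NormalDist().cdf(z))


# Hypothetical: the same 0.5-minute difference in mean task time,
# with a process standard deviation of 3 minutes, at growing sample sizes.
for n in (10, 100, 1000):
    p = two_sample_z_p_value(diff=0.5, sigma=3.0, n_per_group=n)
    print(f"n per group = {n:4d}: p = {p:.4f}")
```

The p-value falls from about 0.7 at 10 observations per group to well below 0.001 at 1,000, although the difference itself never changes. Statistical significance alone therefore says little about whether the difference matters.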

This can work two ways. First, a statistically significant difference can indicate that a problem exists while the actual measured difference has little or no practical significance. For example, a team comparing two methods of completing a task finds a statistically significant difference in the time required. From a practical standpoint, though, the cycle time difference has no impact on the customer. Either the team measured something unimportant to the customer, or a larger difference is needed before the customer is affected.
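One way to check both kinds of significance at once is to compare a confidence interval for the difference against the smallest difference the customer would notice. The sketch below assumes a normal approximation with a known standard deviation; the 2-minute practical threshold, the helper names `difference_interval` and `classify`, and the task-time numbers are all hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def difference_interval(diff: float, sigma: float, n_per_group: int,
                        confidence: float = 0.95) -> tuple[float, float]:
    """Confidence interval for a difference in means between two equal-size
    groups, using a normal approximation with a known common sigma."""
    standard_error = sigma * sqrt(2.0 / n_per_group)
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    return diff - z_crit * standard_error, diff + z_crit * standard_error


def classify(diff: float, sigma: float, n_per_group: int,
             practical_threshold: float) -> tuple[bool, bool]:
    """Return (statistically_significant, too_small_to_matter)."""
    low, high = difference_interval(diff, sigma, n_per_group)
    # Statistically significant: the 95% interval excludes zero.
    statistically_significant = low > 0 or high < 0
    # Too small to matter: the whole interval lies inside +/- the threshold.
    too_small_to_matter = -practical_threshold < low and high < practical_threshold
    return statistically_significant, too_small_to_matter


# Hypothetical: method B is 0.5 minutes faster on average (sigma = 3 minutes,
# 1,000 tasks per method); customers only notice differences of 2 minutes or more.
sig, small = classify(diff=0.5, sigma=3.0, n_per_group=1000, practical_threshold=2.0)
print(f"statistically significant: {sig}, too small to matter: {small}")
```

Here the interval (roughly 0.24 to 0.76 minutes) excludes zero but stays well inside the 2-minute threshold: statistically significant, yet of no practical consequence to the customer.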

The reverse can also happen. The team may find that the observed time difference described above is not statistically significant even though it has a practical impact on customers or finances. In that case, the team may need to adjust the acceptance criteria, collect more data (i.e., increase the sample size), or move forward with process changes.
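When the team decides to collect more data, a standard sample-size formula for comparing two means estimates how many observations each group needs to detect the practically important difference. The sketch below assumes a two-sided test, a known common standard deviation, and illustrative choices of alpha = 0.05 and 80% power; the helper name `sample_size_per_group` and the task-time numbers are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(delta: float, sigma: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate observations needed in each of two groups for a two-sided
    test to detect a true difference of `delta`, assuming a known common sigma:
        n = 2 * ((z_(1 - alpha/2) + z_(power)) * sigma / delta) ** 2
    """
    z_alpha = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    z_power = NormalDist().inv_cdf(power)
    n = 2.0 * ((z_alpha + z_power) * sigma / delta) ** 2
    return ceil(n)  # round up: a fraction of an observation is not possible


# Hypothetical: a 2-minute difference matters to the customer,
# and task times vary with a standard deviation of about 6 minutes.
required = sample_size_per_group(delta=2.0, sigma=6.0)
print(f"observations needed per group: {required}")
```

Under these assumptions, roughly 142 observations per method would be needed, so an initial study of only a handful of tasks could easily miss a difference the customer would notice.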

When statistical and practical significance do not agree, the disagreement indicates that an analysis problem may exist. The cause may be the sample size, the voice of the customer, measurement system problems, or other factors.